
Driving Real Business Outcomes with AI

How MasterBorn generates multi-million dollar opportunities for our clients with outcome-focused AI implementations

We see a huge number of white papers and articles about failed AI implementations, but rarely ones showing how to generate real business outcomes with AI/GenAI.

Over 15 years in digital transformation, I've helped more than 40 organizations implement technology at scale and deliver millions in cost savings and new revenue with outcome-focused AI implementations. Here at MasterBorn, I advise our customers daily on how to achieve tangible business results.

Over the past year, our AI success stories have included $4M in cost savings for a supply-chain SaaS platform and a multi-million-dollar profit opportunity from a responsible AI strategy for a MedTech organization.

In this article, I'll share the framework we use at MasterBorn to generate such results, based on our years of hands-on experience across diverse industries.

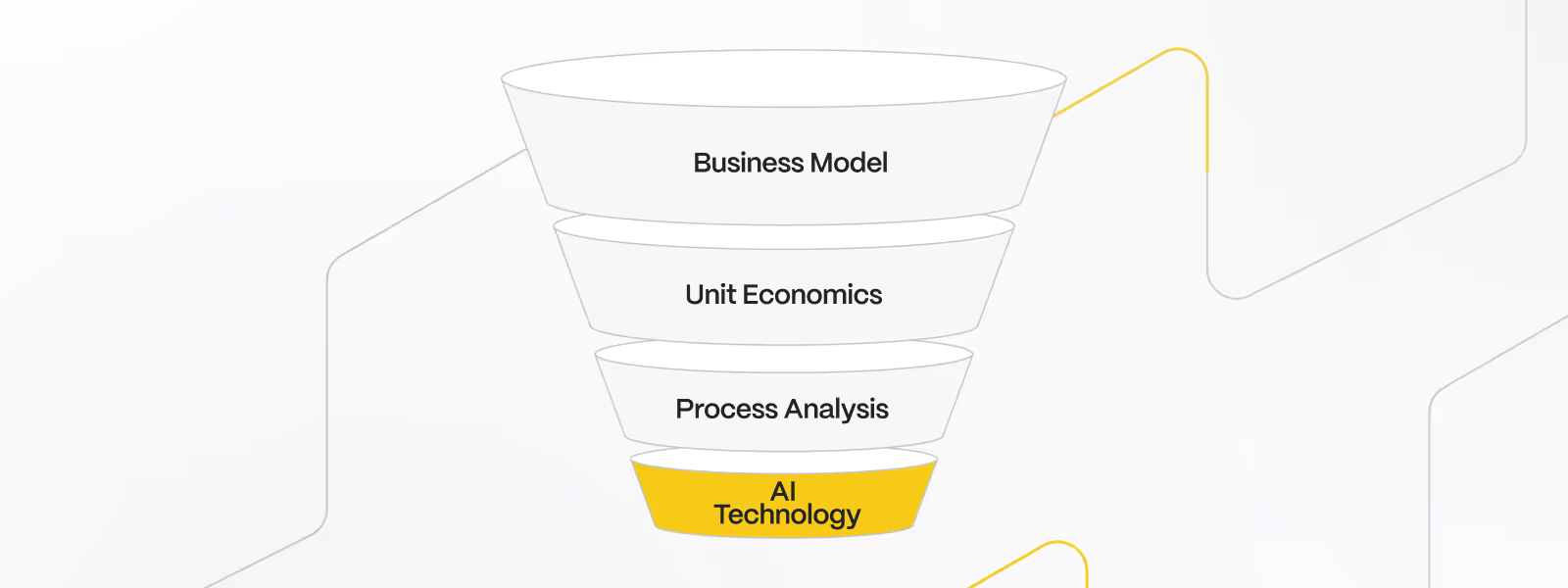

When thinking of outcome-focused AI projects, we start with economics and end with technology

A company’s P&L is a map of AI opportunities: every P&L item represents a process that either costs money or makes money. AI can optimize both, but only if the economics are well understood first.

Why the typical approach fails:

Most teams start by asking "What can AI do for us?" This leads to:

Our approach:

We start with the business model and ask:

Then ask: "Could we change these economics with AI?"

This changes how we think about the whole project: the implementation's success metric becomes financial outcome, not technical achievement. The project timeline becomes "what's the fastest path to ROI" instead of "what's the coolest thing we can build".

The north star principle:

AI doesn't create value by automating tasks. It creates value by changing the unit economics of your business model.

This thinking differentiates companies building another chatbot from those building competitive advantages.

Before prototyping anything, let’s measure the problem.

We've seen many teams build sophisticated AI solutions for problems they never properly quantified. Later on, they can't prove ROI because they never established a baseline. It’s crucial to understand the current-state economics, which become both the business case and the success benchmark.

How to do it:

We spend the 1-2 weeks of discovery on:

1. Identifying the right metrics:

We typically look at process-related metrics, such as:

2. Agreeing on minimum acceptable success rate:

A critical point that many people skip: GenAI is probabilistic, so it won't be perfect. Fortunately, there is a way to determine what accuracy is actually needed to make it profitable.

Let’s see how to calculate the acceptable success rate with a simplified example:

This number then becomes the project's success threshold. With that in mind, we have de-risked the entire initiative: even if the AI implementation doesn't achieve 100% accuracy, we now know the minimum accuracy needed to make the project profitable.
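A minimal sketch of how such a threshold could be computed, assuming a simple per-item model: each correct AI result saves a fixed amount, each wrong result costs a fixed amount in rework, and every item carries a fixed AI running cost. The function names and all dollar figures below are hypothetical and chosen only for illustration; they are not taken from a client project.

```python
def net_value_per_item(accuracy: float, saving_per_correct: float,
                       cost_per_error: float, ai_cost_per_item: float) -> float:
    """Expected net value of running the AI on one item (hypothetical model)."""
    expected_saving = accuracy * saving_per_correct
    expected_rework = (1.0 - accuracy) * cost_per_error
    return expected_saving - expected_rework - ai_cost_per_item


def breakeven_accuracy(saving_per_correct: float, cost_per_error: float,
                       ai_cost_per_item: float) -> float:
    """Accuracy at which net_value_per_item is exactly zero.

    Solves p * s - (1 - p) * e - c = 0 for p, giving p = (c + e) / (s + e).
    """
    return (ai_cost_per_item + cost_per_error) / (saving_per_correct + cost_per_error)


# Hypothetical inputs: $10 saved per correct result, $30 rework per error,
# $2 of AI running cost per item.
threshold = breakeven_accuracy(10.0, 30.0, 2.0)
print(f"Minimum acceptable success rate: {threshold:.0%}")
```

With these assumed inputs, any accuracy above the printed threshold makes the per-item value positive. A result above 100% would mean the process cannot be profitable under this model at any accuracy.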

ACME Insurer explored how AI could improve claims processing. Today, the company handles 340,000 claims a year at a total cost of $20M. The goal was not to replace human staff but to test an AI-assisted model, with humans remaining as the experts (human in the loop).

Proposed AI-enhanced workflow:

Assumptions for modeling ROI:

Financial model:

This becomes a business case that can get executive sign-off: it shows the real dollar opportunity and an “AI accuracy margin” that we can use to define project success.

Note also that the AI-enhanced workflows reduce cost, and the time saved can be used to handle more claims with the same headcount. In the best case, this unlocks new, pure profit.
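As a rough illustration of how a model like this can be laid out, the sketch below uses the two figures stated above (340,000 claims a year, $20M total cost) and two clearly hypothetical assumptions: the share of handling cost saved when an AI suggestion is usable, and the annual cost of the AI workflow. These assumed values are not ACME's actual modeling inputs, and unusable suggestions are assumed to save nothing.

```python
# Figures stated in the article for ACME Insurer.
CLAIMS_PER_YEAR = 340_000
TOTAL_ANNUAL_COST = 20_000_000  # USD

# HYPOTHETICAL assumptions, chosen only for illustration.
TIME_SAVED_FRACTION = 0.30   # share of handling cost saved per usable AI suggestion
ANNUAL_AI_COST = 1_500_000   # USD per year to build and run the AI workflow


def cost_per_claim() -> float:
    """Baseline cost of handling one claim today."""
    return TOTAL_ANNUAL_COST / CLAIMS_PER_YEAR


def annual_net_savings(accuracy: float) -> float:
    """Net yearly savings if a fraction `accuracy` of AI suggestions is usable."""
    gross_savings = TOTAL_ANNUAL_COST * TIME_SAVED_FRACTION * accuracy
    return gross_savings - ANNUAL_AI_COST


def breakeven_accuracy() -> float:
    """Accuracy at which savings exactly cover the AI cost."""
    return ANNUAL_AI_COST / (TOTAL_ANNUAL_COST * TIME_SAVED_FRACTION)


print(f"Baseline cost per claim: ${cost_per_claim():.2f}")
print(f"Breakeven accuracy: {breakeven_accuracy():.0%}")
for accuracy in (0.50, 0.80):
    print(f"Accuracy {accuracy:.0%}: net savings ${annual_net_savings(accuracy):,.0f}")
```

The gap between the breakeven accuracy and the accuracy the team expects to reach is one way to express the “AI accuracy margin”.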

Teams commonly build financial models based on "if everything works perfectly" scenarios. Always model your effectiveness breakeven point, then treat anything above it as a bonus. This protects the project and makes wins easier to celebrate.

The best AI implementations keep humans in control - and there's a crucial technical reason why: GenAI is non-deterministic.

Here's a critical lesson from AI implementations across industries: "fully automated" systems often work beautifully in PoCs but, in production, make wrong decisions that humans later struggle to catch. We see AI succeed when it targets processes that are non-deterministic by nature.

Apply GenAI where humans already operate probabilistically:

Avoid:

The architectural pattern that works:

The most successful AI systems follow the pattern of “AI suggests → Human decides”.

In practice, this means a typical workflow requires explicit human approval. The AI generates a recommendation with a confidence score and clear reasoning, so that each recommendation is traceable. The system routes it to the appropriate reviewer based on complexity and confidence. The human expert can then approve, modify, or reject it.
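The routing step can be sketched roughly as follows. This is a minimal illustration, not a description of a specific production system: the `Recommendation` fields, the reviewer names, and the 0.85 confidence threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    """An AI suggestion plus the context a reviewer needs (hypothetical shape)."""
    item_id: str
    suggestion: str
    confidence: float  # 0.0 to 1.0, as reported by the AI component
    reasoning: str     # stored for traceability
    complexity: str    # "low" or "high"


def route_reviewer(rec: Recommendation, confidence_threshold: float = 0.85) -> str:
    """Pick a reviewer queue; every recommendation still goes to a human."""
    if not 0.0 <= rec.confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    # Complex or low-confidence items go to the more experienced reviewer.
    if rec.complexity == "high" or rec.confidence < confidence_threshold:
        return "senior_reviewer"
    return "standard_reviewer"


rec = Recommendation("item-001", "approve", 0.92, "Matches policy terms", "low")
print(route_reviewer(rec))
```

Note that no branch auto-approves: confidence only changes who reviews the recommendation, not whether it is reviewed.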

This requires both a UI aligned with the “human in the loop” approach and an underlying architecture that supports process continuity. From a technical perspective, the system must be built to pause, present a probabilistic result for human review, capture the correction, and then commit the final transaction. This is often implemented as a “state machine”.
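A minimal sketch of such a state machine, assuming four states and in-memory storage. The state names, the `ReviewWorkflow` class, and its methods are hypothetical; a real system would persist the state so that a paused item survives restarts.

```python
from enum import Enum, auto


class State(Enum):
    RECEIVED = auto()
    AWAITING_REVIEW = auto()  # paused: AI result is shown to a human
    DECIDED = auto()          # human approved, modified, or rejected
    COMMITTED = auto()        # final transaction recorded


# Allowed transitions; anything else is rejected.
ALLOWED = {
    State.RECEIVED: {State.AWAITING_REVIEW},
    State.AWAITING_REVIEW: {State.DECIDED},
    State.DECIDED: {State.COMMITTED},
    State.COMMITTED: set(),
}


class ReviewWorkflow:
    def __init__(self, item_id: str) -> None:
        self.item_id = item_id
        self.state = State.RECEIVED
        self.ai_suggestion = None
        self.final_decision = None
        self.correction = None

    def _move(self, target: State) -> None:
        if target not in ALLOWED[self.state]:
            raise ValueError(f"Cannot go from {self.state.name} to {target.name}")
        self.state = target

    def present_suggestion(self, suggestion: str) -> None:
        """Pause the process and hand the AI result to a reviewer."""
        self._move(State.AWAITING_REVIEW)
        self.ai_suggestion = suggestion

    def record_decision(self, decision: str) -> None:
        """Store the human decision and capture any correction of the AI."""
        self._move(State.DECIDED)
        self.final_decision = decision
        if decision != self.ai_suggestion:
            self.correction = (self.ai_suggestion, decision)

    def commit(self) -> str:
        """Commit the human's final decision, never the raw AI output."""
        self._move(State.COMMITTED)
        return self.final_decision


workflow = ReviewWorkflow("item-001")
workflow.present_suggestion("approve")
workflow.record_decision("reject")  # the reviewer overrides the AI
print(workflow.commit(), workflow.correction)
```

Because `commit` is only reachable from `DECIDED`, the structure itself guarantees that nothing is finalized without a human decision, and the captured corrections provide a feedback signal for later improvement.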

Following a successful business case (from Pillar 1), the ACME Insurer moved to implementation with a critical architectural decision: how to manage claims with the AI-enhanced workflow while keeping humans in the loop.

Implementation:

When a claim arrives, the AI system:

Why was augmentation architecture critical?

Claims processing is probabilistic by nature: even experts sometimes disagree on the final classification. By keeping humans in the loop:

Don't design for 100% automation on day one. It creates organizational resistance, can't handle edge cases gracefully, and provides no feedback loop for improvement.

Technology is the easier part; the organizational change process is where most AI projects struggle.

In our experience, most failed AI initiatives had working technology. They failed because people didn't use it or leadership didn't promote it.

That’s why early in the discovery process we engage with key stakeholders:

Finding an internal champion:

We also identify someone with organizational credibility who will act as the first power user. They'll adopt the AI solution early, validate its business value, and become an advocate who drives adoption among co-workers.

We then execute in phases:

Phase 1 - PoC (4-6 weeks):

Phase 2 - Pilot (1-3 months):

The pilot is a key phase for success. A pilot tests operational reality - including legacy data, scalability (concurrent transactions), and edge cases.

Phase 3 - Scaled Rollout (3-6 months):

Engineers often build the perfect PoC but forget about adoption. The result is a technically great prototype that nobody ends up using.

Optimize for performance and accuracy, but don’t neglect usability, explainability, and integration with existing workflows, which are what make real users trust and adopt the new AI system.

Here’s a summary of what successful AI projects really involve, because reality can be slower and messier when a project is unplanned or poorly planned.

Winning approach: small steps, not big changes. Focus on business outcomes. Pick one process, prove it works, build trust, expand, and improve over time.

Why most teams fail: wrong expectations from the start. Teams want 2-month delivery to production, perfect accuracy, and AI running by itself. Instead, they end up with 6-12 month projects, 70-85% accuracy, and major challenges with AI adoption across the organization.

Technology is rarely the problem. Failing to think about the end value and the wider organization is what will likely kill the project.
